(The Hill) – Child pornography generated by artificial intelligence (AI) could overwhelm an already inundated reporting system for online child sexual abuse material, a new report from the Stanford Internet Observatory found.

The CyberTipline, which is run by the National Center for Missing and Exploited Children (NCMEC), processes reports of child sexual abuse material and shares them with the relevant law enforcement agencies for further investigation.

Open-source generative AI models that can be retrained to produce the material “threaten to flood the CyberTipline and downstream law enforcement with millions of new images,” according to the report.

“One million unique images reported due to the AI generation of [child sexual abuse material] would be unmanageable with NCMEC’s current technology and procedures,” the report said.

The full article is available at ozarksfirst.com.

(Story by Julia Shapero, The Hill, found at ozarksfirst.com)

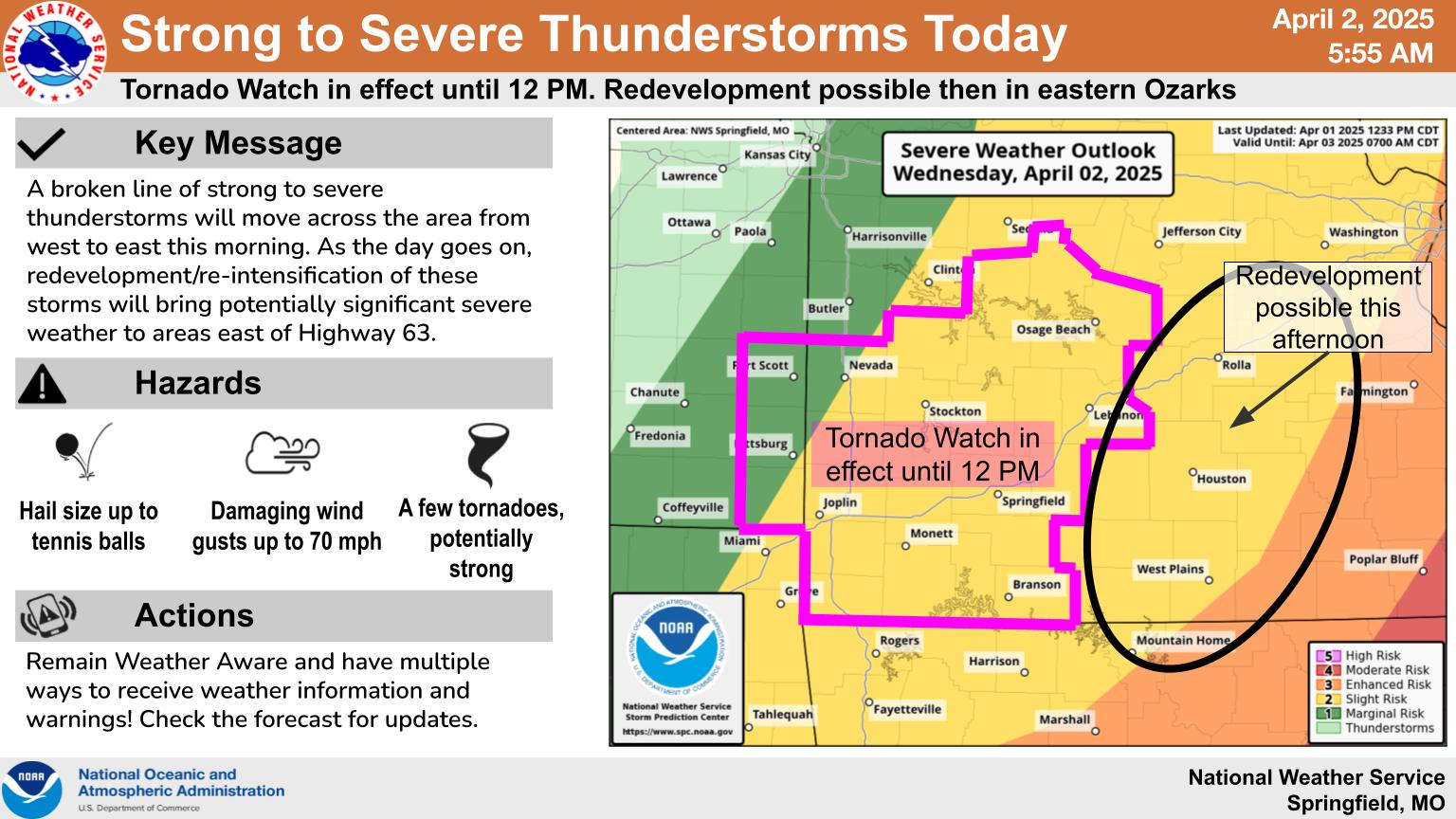

Wednesday Storms, Thursday Rain

Wednesday Storms, Thursday Rain

Eureka Springs Police Officers Save Lives in Fire

Eureka Springs Police Officers Save Lives in Fire

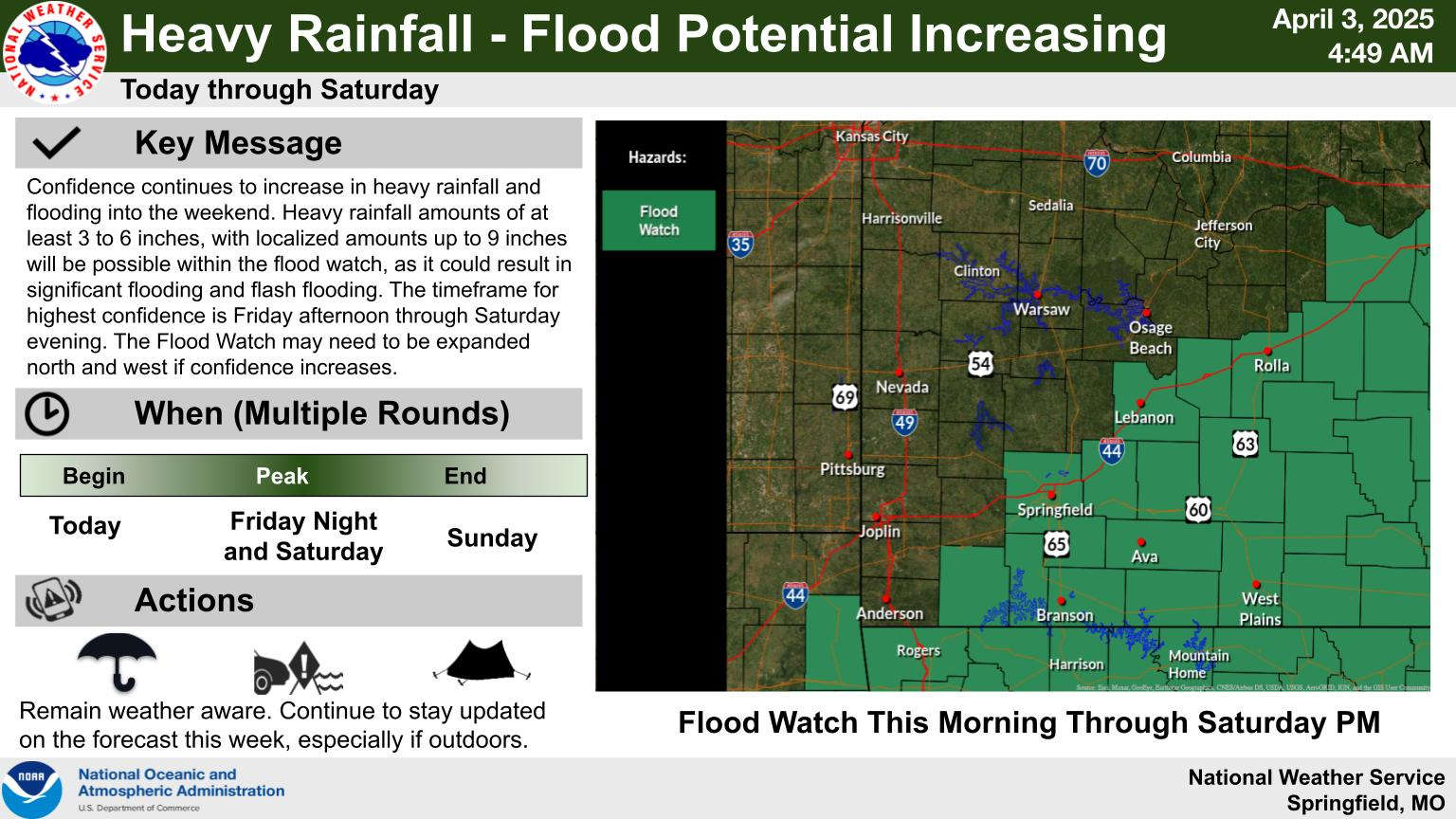

Active Weather for the Week, Weekend

Active Weather for the Week, Weekend

Sides Pleads Not Guilty in Taney County Case

Sides Pleads Not Guilty in Taney County Case